A typical scene at a car enthusiasts’ motorhead event involves souped-up cars with their hoods propped open and a bunch of grease monkeys gathered around, staring at a powerful V12 combustion engine. With the migration to electric cars, it is probably a scene that will start to phase out over time. A bit like classical asymmetric cryptography!

There has been a lot of coverage of the anticipated advance of quantum computers: the boon they will bring to medicine, science, and chemistry, and the curse they pose for IT security, with Shor’s algorithm sounding the death knell for classical asymmetric cryptography, which underpins the security of most of what we do on the internet. For this blog post, I decided to focus on some of the post-quantum cryptography (PQC) activities going on under the hood as we speak. I’m talking specifically about the Internet Engineering Task Force (IETF), which is tasked with preparing the public internet for a post-quantum world: figuring out how to retool and migrate the protocols and standards, built on classical algorithms, that we rely on so extensively today.

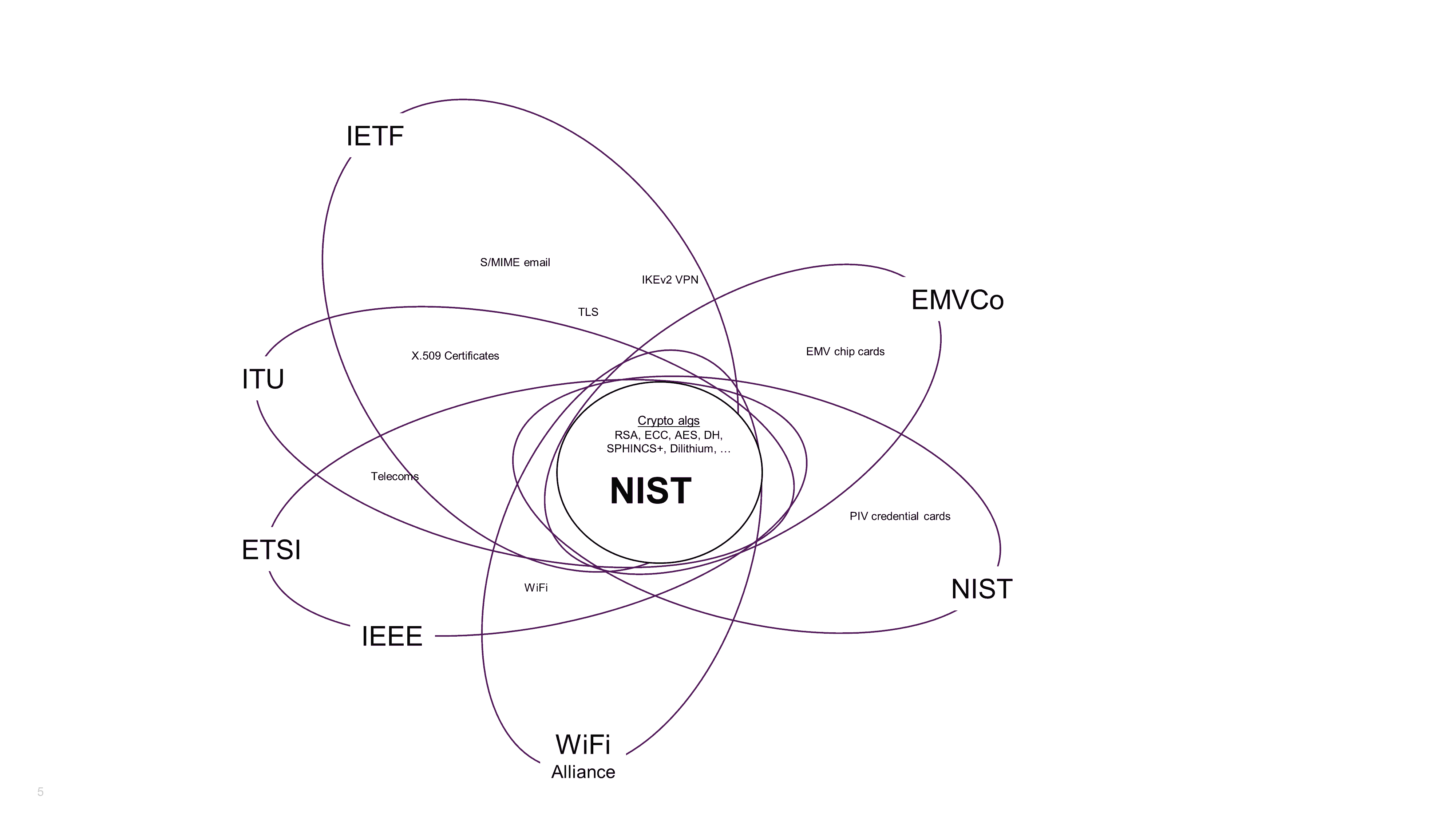

Figure 1: NIST forms the nucleus of the PQC migration, surrounded by a shell of other standards bodies

First, to provide a bit of context, it is worth reviewing where the IETF fits into the PQC story. At the nucleus of Figure 1 is the National Institute of Standards and Technology (NIST). Its ongoing competition to identify and standardize PQC primitives has been well documented. You might already be au fait with some of the short-listed PQC algorithms, including SPHINCS+, CRYSTALS-Dilithium, and CRYSTALS-Kyber, as well as the stateful hash-based signature schemes XMSS and LMS, which are already NIST standards [SP 800-208]. The output of NIST’s work will then be used by a shell of orbiting standards bodies such as the European Telecommunications Standards Institute (ETSI), the Institute of Electrical and Electronics Engineers (IEEE), EMVCo, the International Telecommunication Union (ITU), and the Wi-Fi Alliance®.

Outside NIST’s area of responsibility, it falls to each of the respective standards bodies to update the protocols and technologies that rely on cryptography deemed vulnerable to quantum attacks, making them ready for a post-quantum world.

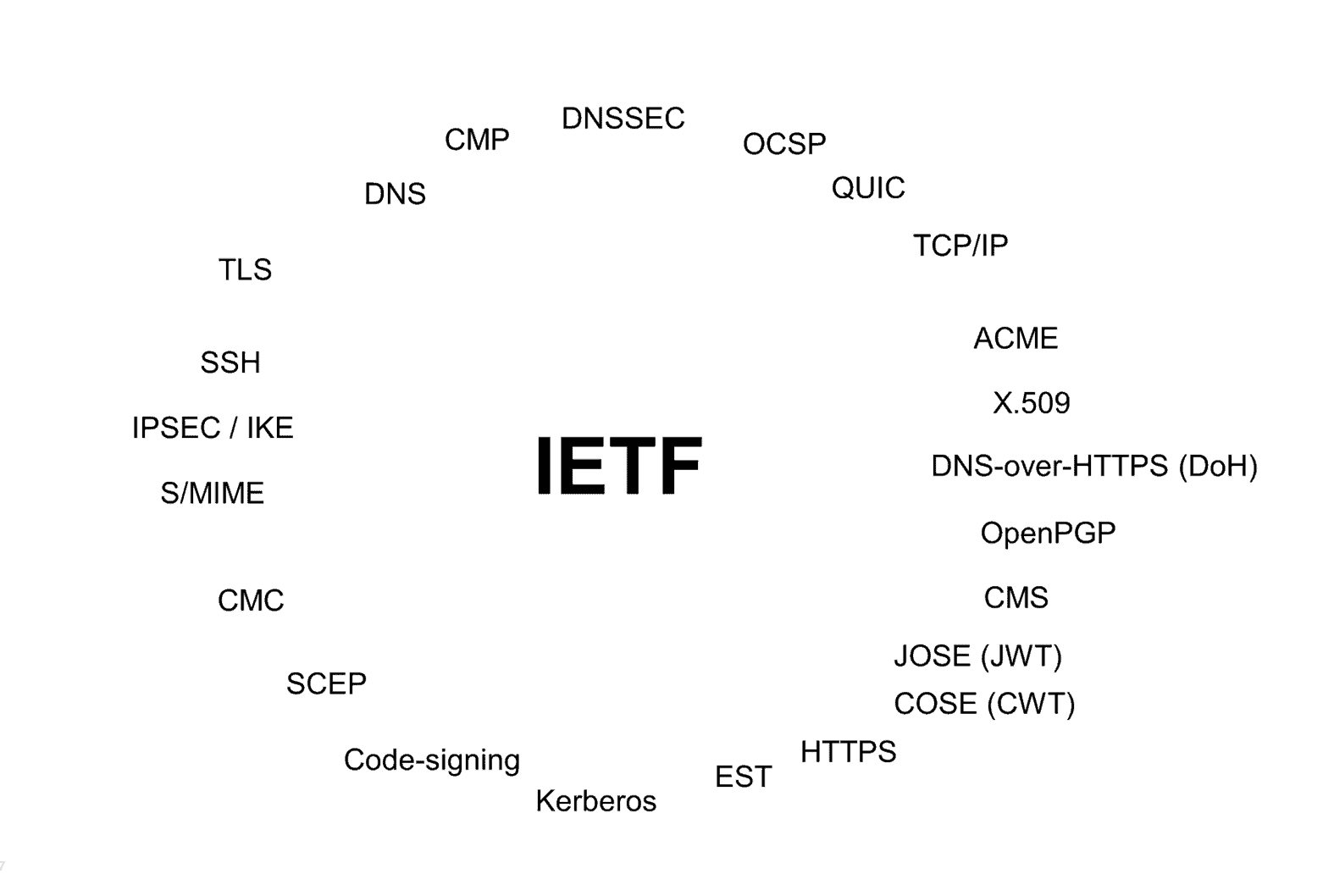

Consider the IETF, who own the specifications for many of the internet’s cryptographic and security protocols:

Figure 2: Some of the security protocols and standards maintained by the IETF

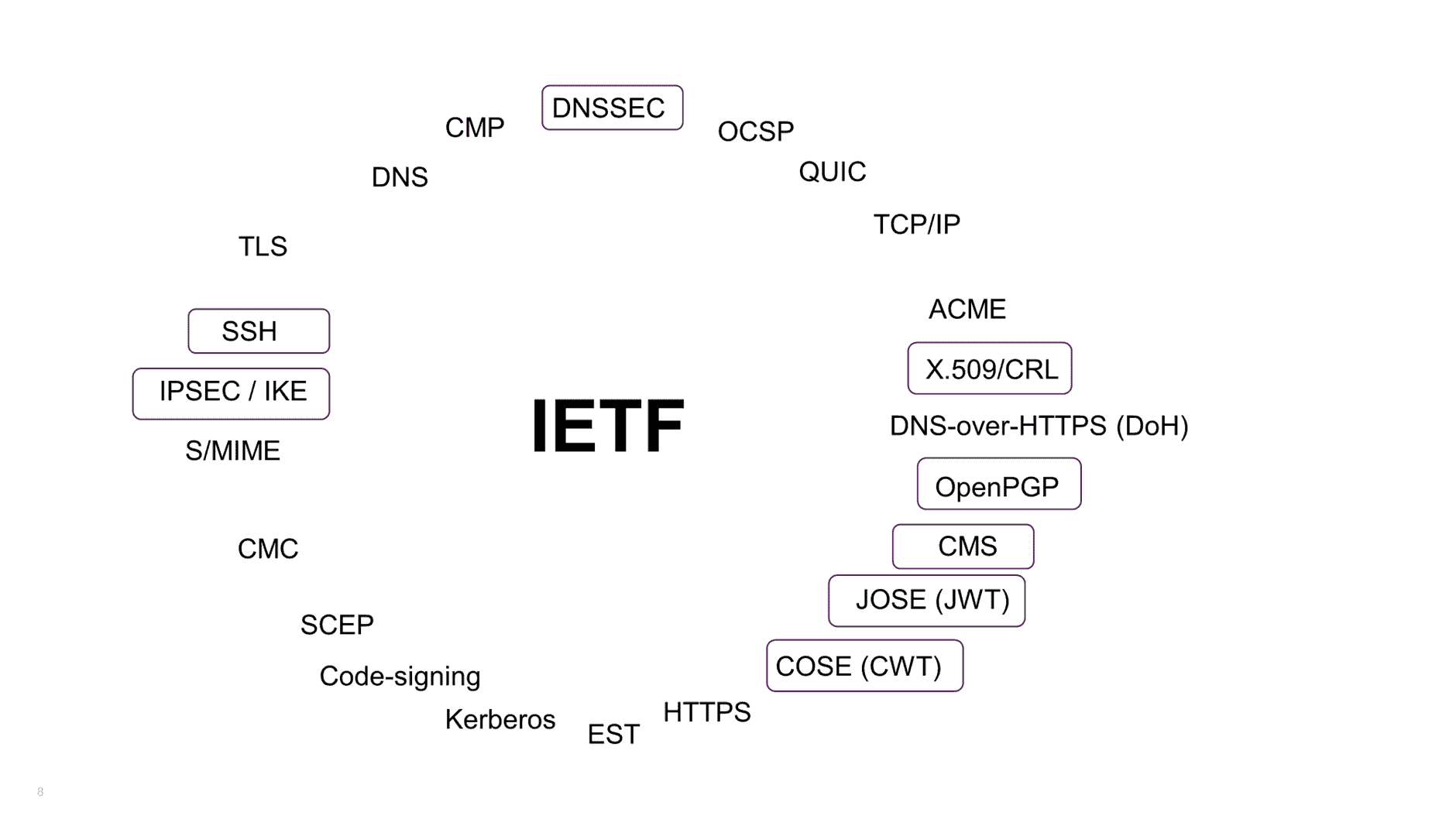

You might not recognize all of these protocols, but you should be at least vaguely familiar with TLS, SSH, HTTPS, TCP/IP, and DNSSEC, fundamental building blocks for internet communication and security. Fortunately, there is an opportunity to reuse and recycle. The protocols highlighted below are those that need to be retooled with PQC algorithms. The remaining protocols actually don’t need updating. They get their cryptography for free by embedding another PQ-safe protocol. So the list is perhaps less daunting than it first appears but still some non-trivial work lies ahead.

Figure 3: IETF security protocols and standards with those requiring PQC retooling highlighted

A couple of my Entrust colleagues, Mike Ounsworth and John Gray, are fully embedded in the IETF Working Groups. They have spent the past 5 years defining the standards for composite digital certificates, which combine classical and PQ cryptography. Last time I counted, they had authored or co-authored 13 papers that will eventually define and specify how communication protocols operate in a post-quantum world. Pretty cool stuff! They reported back from the recent IETF 116 meeting in Yokohama, Japan, where the general MO was “Hurry up and wait.” In essence, that means getting drafts of these protocols started, then putting them in a holding pattern until NIST finalizes the standards for CRYSTALS-Dilithium, FALCON, SPHINCS+, and CRYSTALS-Kyber.

As I chatted with my colleagues, one thing that was clear was their appreciation for just how well-crafted and versatile the classical algorithms and APIs are. I’m referring to the work of the pioneers of modern-day cryptography, such as the public key cryptography defined in the ’70s by Rivest, Shamir, and Adleman (RSA), by Diffie, Hellman, and Merkle, and by others. I imagine it is the equivalent of modern-day car designers gazing under the hood of a vintage E-Type Jaguar or Ferrari in appreciation of its design, power, and finesse. We’re talking about the nuts and bolts of cryptographic algorithms and APIs here: key transport, key agreement, key encapsulation mechanisms (KEMs), double ratchets, and 0.5-RTT (round-trip time) data! What the IETF working groups are finding is that some properties of the classical algorithms and mechanisms are very hard to retain when migrating to PQC equivalents. It is proving challenging even for some of the sharpest minds in the industry. However, at a slow and steady pace, they are making good progress.
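To give a feel for why some classical properties are hard to carry over, here is a minimal sketch contrasting two API shapes. With Diffie-Hellman key agreement, each party derives the same secret from its own private key and the other party’s public key, so two published public keys are enough. A KEM, the shape that CRYSTALS-Kyber exposes, works differently: one side encapsulates to the other’s public key and must send the resulting ciphertext before both hold the secret. The group parameters are toy values and completely insecure, the KEM is built from Diffie-Hellman only to illustrate the interface (loosely in the spirit of the DHKEM construction in RFC 9180, much simplified) and is not Kyber, and all function names are hypothetical.

```python
import hashlib
import secrets

# Toy group parameters for illustration only: NOT secure and NOT post-quantum.
P = 2**127 - 1  # Mersenne prime M127
G = 3

def dh_keygen():
    """Return a (private, public) Diffie-Hellman key pair in the toy group."""
    sk = secrets.randbelow(P - 2) + 1
    return sk, pow(G, sk, P)

def dh_shared(my_sk, their_pk):
    """Key agreement: combine our own private key with the other party's public key."""
    z = pow(their_pk, my_sk, P)
    return hashlib.sha256(z.to_bytes(16, "big")).digest()

# Hypothetical KEM-shaped interface (keygen / encaps / decaps), built from DH purely to show the API shape.
def kem_keygen():
    return dh_keygen()

def kem_encaps(pk):
    """Sender makes a fresh ephemeral key; the 'ciphertext' must travel to the key owner."""
    esk, epk = dh_keygen()
    return epk, dh_shared(esk, pk)

def kem_decaps(sk, ct):
    """Recipient recovers the shared secret from the received ciphertext."""
    return dh_shared(sk, ct)

# Key agreement: both parties derive the same secret from published public keys alone.
alice_sk, alice_pk = dh_keygen()
bob_sk, bob_pk = dh_keygen()
assert dh_shared(alice_sk, bob_pk) == dh_shared(bob_sk, alice_pk)

# KEM: the secret is only shared once the encapsulator's ciphertext reaches the key owner.
sk, pk = kem_keygen()
ct, ss_sender = kem_encaps(pk)
assert kem_decaps(sk, ct) == ss_sender
```

In a real deployment the KEM functions would come from a scheme such as CRYSTALS-Kyber, and that extra ciphertext message is the kind of detail that can change message flows in protocols originally designed around static or non-interactive Diffie-Hellman.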

One of the initiatives I learned about from Entrust’s participation in IETF 116 was our support for the new IETF PQUIP (Post-Quantum Use In Protocols) working group. The name PQUIP, a post-quantum spin on the word “equip,” was actually coined by my colleague Mike Ounsworth. The group was founded in January 2023 and recently had its first meeting at IETF 116. The PQUIP charter is available at https://datatracker.ietf.org/wg/pquip/about/. I know my Entrust colleagues are super proud to be founding and active members of this group, which is focused on bringing the entire internet community together to share knowledge and best practices for integrating the new PQC algorithms into the myriad IT security protocols that we rely on every day. This is about mathematicians, subject matter experts, software engineers, and cryptographers from academia and industry coming together to pave the way for a smooth transition to PQ. Architecting the largest cryptographic migration that humanity has ever undertaken is no small feat! It involves balancing security against ease of deployment for IT admins, in often politics-ridden public discussion groups, all under a tight and unforgiving deadline!

So far, the primary output of PQUIP is a document cataloging all of the PQC efforts across the IETF. Check out https://github.com/ietf-wg-pquip/state-of-protocols-and-pqc to see how much design work is involved. Also note the list at the bottom, “Security Area protocols with no PQC-specific action needed,” which covers the protocols that will get PQC for free.

Eminent mathematician and PQC academic Michele Mosca, when asked for his advice to enterprises on preparing for PQ, said “make this about lifecycle management not crisis management.” I think it is fair to say that the IETF working groups are putting those wise words into practice, figuring out the tricky low-level details while we still have time. I look forward to seeing the output of their good work in the coming months and years.